Back in last year August we started getting an odd error from the couch-backup lambda. Out of nowhere we started getting error messages like the below.

1 | { |

Looking through our code we don’t directly open any files or sockets which would cause this type of issue.

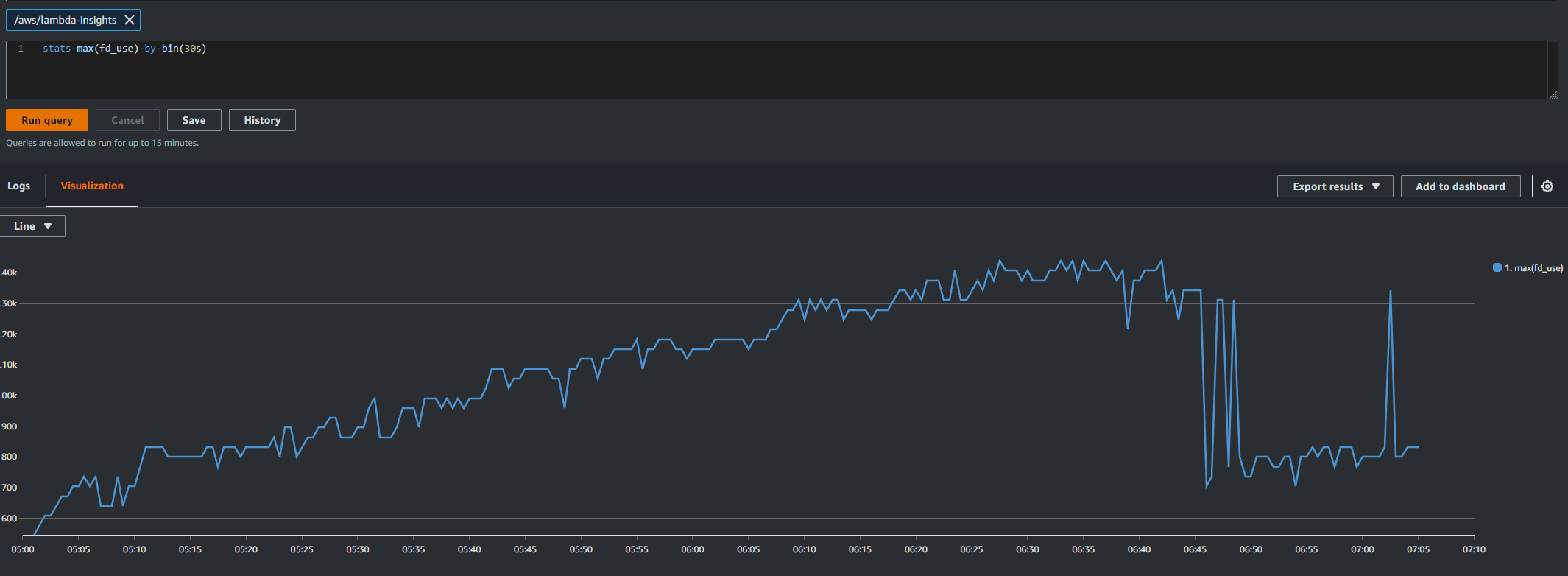

We could see that file descriptors where being left open using Lambda Insights.

So, we Googled to see if anyone else had this issue and discovered this blog. It suggested adding a node package called wtfnode wtfnode - npm (npmjs.com). Unfortunately, this didn’t seem to work, the author of the package does say that this package is using undocumented Node apis and could break without warning.

After a few more weeks of Googling, I came across this package samswen/lambda-emfiles: solve node AWS lambda EMFILE issue (github.com). This package handles the EMFILE issue a different way, it causes the Lambda process to exit with a status code of 1. This means that lambda throws away the current lambda environment and starts a new one. While it didn’t fix the issue it meant that we didn’t get the retries when a process ran out of file descriptors.

I did a little poking around in the source code of this package to see how it was working out the number of open file descriptors. What I found was

1 | return new Promise((resolve) => { |

The thing that supprised me was that the Lambda run time has access to the /proc virtual directory. I guess that makes sense as it is running on Linux.

Having found this I had a play around on my machine to see what information you could get. I found that ls -l returned

1 | lr-x------ 1 sbx_user1051 990 64 Nov 29 17:46 0 -> /dev/null |

After finding this we added some more logging to the lambda to record this before the process finished and what we found was the number of tmp files kept increasing. We now had out smoking gun.

Now that we knew what we were looking for I had a look to check that our code wan’t creating temp files, and we weren’t.

Then I started having a look at the Cloudant couchbackup library that we use. The first thing I noticed was in their package.json they referenced a package tmp, so it looks like they use tmp files. Next, I looked for references to that package and found

1 | function apiDefaults() { |

Ok, so firstly does this get called when we use their backup function and secondaly what does it do? More digging and I found it is aliased app.js and it is called in the backup function.

1 | backup: function(srcUrl, targetStream, opts, callback) { |

Right, so it is called in the path we are using what does it do? Next, I dig into the tmp package.

1 | /** |

The first thing I noticed was on line 15 it is opening a file and then on like 18 is closes the file only if you pass in opts.discardDescriptor === true. That looks like the issue!!

Lastly before raising an issue with Cloudant I wanted to test it, so in our couch-backup lambda I went into the node_modules folder, found the Cloudant code and changed tmp.fileSync().name to tmp.fileSync({discardDescriptor : true}).name and push those changes to production.

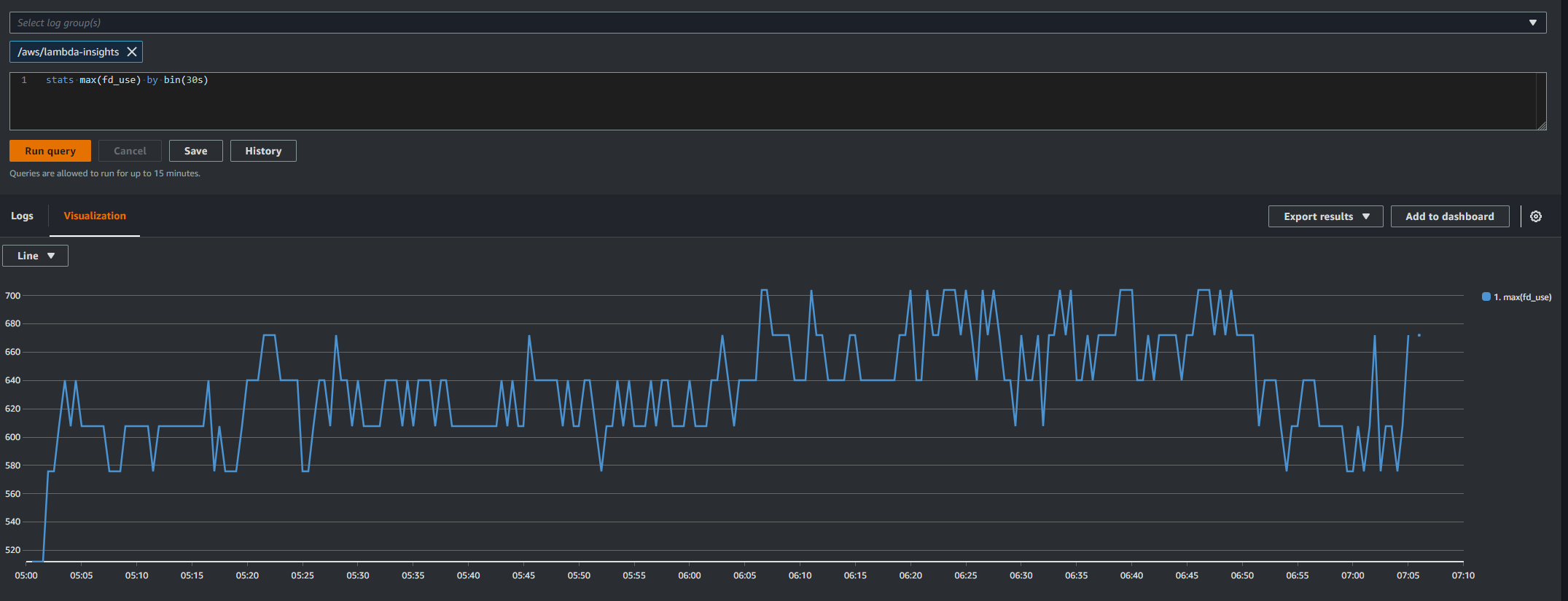

The next day this was the graph from Lambda Insights

Yay!!! We have found the problem.

The last thing was raising an issue with Cloudant File descriptor leak · Issue #535 · cloudant/couchbackup (github.com) and they are implementing what I suggested fix file descriptor leak in backup by emlaver · Pull Request #536 · cloudant/couchbackup (github.com)